this image contains text

A Look at Compression - dp:deepee@demoscene.interstat.net

Introduction:

Compression is a harsh mistress, and useful for enormous data stored with

and required for just about anything. In this tutorial, three different

types of compression will be covered, from relatively different areas.

Run Length Encoding:

Stop, dont skip this section. Although a simple beast, there are plenty

of improvements that can be made to standard RLE that will make it better

for your purposes, and it works well in combination with other algorithms

that have already taken place on the data.

The first step in just about any compression that will usually help the

performance is to take the deltas of the data. This would mean that you

start by storing the value of the first byte, and for every byte after

that, you subtract the next byte from the current byte and store the

result. Say you have the data:

1 2 3 4 5 6 7 8

1 Store the 1

2 Store the deltas:

stored 1 1 1 1 1 1 1 1

As you can see, this can only help the case in things like RLE. Now, the

premise behind RLE is that you store repeated bytes. This can be done by

a variety of methods, as you could store bytes that repeat, words that

repeat, or double words that repeat. To do this, you merely check if the

next character youve read from the stream is the same as the one you had

already read, and if it is, you keep reading ahead until the character no

longer matches. Then, you output something known as a sentinel a byte

character that will signify a repeated sequence followed by the length

and the character itself. What happens when the character is the same as

the sentinel? Simply output the length as 1 and the character.

Now, your mind may ask, Where are these so-called improvements? Well,

here they are:

1 Having a pre-defined sentinel is a bad idea. Scan the data for the

character that occurs the least and use that for your sentinel you

will need to store this in the file or memory somehow to be safe.

2 Make sure you output only sequences of 3 or more. Outputting two

as sentinel 2 character requires 3 bytes, while no compression

character character will take 2 bytes.

3 Dont waste three bytes for the character sentinel issue. Merely

put sentinel 0 and have the decoder of that RLE be prepared to

deal with that.

While not that significant, these can hardly hurt. There are also many

variations you can make at will to make it better yet, although that will

be left up to you. On to the next compression machine, one that will

actually compress things well.

Huffman Compression:

Alas, the performance of RLE is not the best out there. A technique that

works on the frequency of characters was developed by D. A. Huffman about

45 years ago. The technique is simple, in that it creates a binary tree

of the data and uses that to control the representation of characters.

Now, how is this binary tree created? By the frequency of the characters

and their combinations to form the tree. This is best demonstrated by

an example. Lets say we want to encode ABRACADABRA first, we will

tally the number of characters in this string.

A 5

B 2

C 1

D 1

R 2

We need to make a binary tree from this. By far, the easiest method to

accomplish this would be the use of a method that leaves your frequency

node holder as one node, and that node is the root node of the tree. How?

Well, the first step to this would be to sort the frequency node holder

so that the lowest two frequencies are at the front of the holder. Then,

you create a node that has the left and right child as the first and

second item in your holder. For the frequency of the node, you add the

frequency of the left and right child and store it in your newly created

node. Finally, you insert your created node into the list and remove the

the original first and second nodes from the list.

Now, to pair these together. Well, what are the two lowest characters? C

and D. So we now have this for data:

A 5, B 2, C + D 2, R 2

Sort again, and repeat...lets see what are tree will look like, as this

combining work will eventually leave you with one node, a root node, left

in the pile to work with that has all the nodes below us. Lets say it

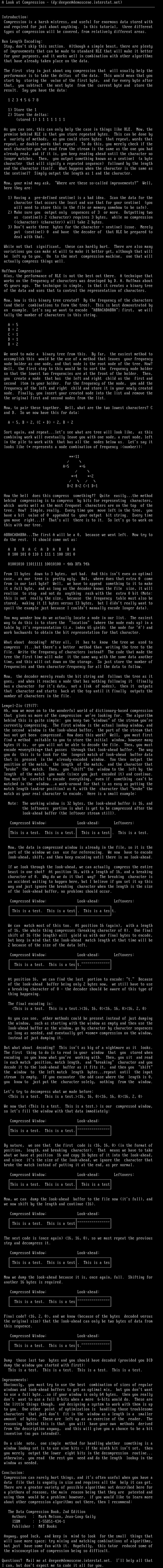

looks like * represents a node combination of frequency :number:

**11

A5 *6

*4 *2

B2 R2 C1 D1

How the hell does this compress something!? Quite easily...the method

behind compressing is to compress by bits for representing characters,

which works well as the most frequent characters are on the top of the

tree. How? Simple, really. Every time you move left in the tree, you

have a bit value of 0 appended to your output bit string. Every time

you move right...1! Thats all there is to it. So lets go to work on

this with our tree.

ABRACADABRA...The first A will be a 0, because we went left. Now try to

do the rest. It should come out as:

A B R A C A D A B R A

0 100 101 0 110 1 111 1 100 101 0

01001010 11011111 10010100 4Ah DFh 94h

From 11 bytes down to 3 bytes, not bad. And this isnt even an optimal

case, as our tree is pretty ugly. But, where does that extra 0 come

from in our last byte? Well, we have to append something to it to make

it a full byte, and as long as the decoder knows the file size, it will

realize to stop and not do anything rash with the extra 0 bit Note:

this is not really the size, because the frequency table must also be

stored, making it 11 bytes versus 13 bytes, but I didnt really want to

spoil the example just because I couldnt manually encode longer data.

You may wonder how do we actually locate a node in our list. The easiest

way to do this is to store the location where the node ends up in a

table by its representation i.e. index 65 yields the node for A and

work backwards to obtain the bit representation for that character.

What about decoding? After all, it has to know the tree we used to

compress it...but theres a better method than writing the tree to the

file. Write the frequency of characters instead! The code that made the

tree the first time will make it the same way with the same data another

time, and this will cut down on the storage. So just store the number of

frequencies and then character:frequency for all the data to follow.

Now, the decoder merely reads the bit string and follows the tree as it

goes, and when it reaches a node that has nothing following it finally

has a character for its data, not a link of nodes, then it outputs

that character and starts back at the top until it finally outputs the

number of characters in the file.

Lempel-Ziv 1977:

Ah, now we move on to the wonderful world of dictionary-based compression

that gives us more of the compression were looking for. The algorithm

behind this is quite simple: you keep two windows of the stream youre

currently compressing. The first window is the actual lookup window, and

the second window is the look-ahead buffer, the part of the stream that

has not yet been compressed. How does this work? Well, you must first

find a method suitable to you to store the initial window, however many

bytes it is, or you will not be able to decode the file. Then, you must

encode *everything* that passes through that look-ahead buffer. The way

you do this is to look for the longest match of look-ahead buffer data

that is present in the already-encoded window. You then output the

position of the match, the length of the match, and the character that

broke the match. Then, you shift the window to the left by the

length of the match you made since you just encoded it and continue.

You must be careful to encode everything, even if something cant be

matched in the window...a work-around for that is as easy as putting the

match length and/or position as 0, with the character that broke the

match as your real character to encode. Here is a small example:

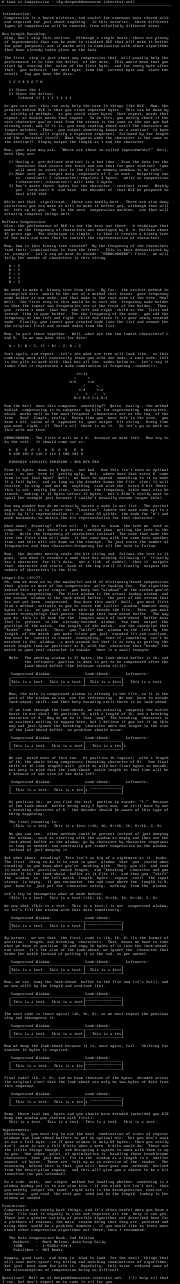

Note: The working window is 32 bytes, the look-ahead buffer is 16, and

the leftovers portion is what is yet to be compressed after the

look-ahead buffer the leftover stream still.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test. This is a test. This is a test.

Now, the data in compressed window is already in the file, so it is the

part of the window we can use for referencing. We now have to encode

look-ahead, shift, and then keep encoding until there is no look-ahead.

If we look through the look-ahead, we can actually compress the entire

beast in one shot! At position 16, with a length of 16, and a breaking

character of 0. Why do we do it that way? The breaking character is

an accident waiting to happen here, but I believe if you set it up this

way and just ignore the breaking character when the length is the size

of the look-ahead buffer, no problems should occur.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test. This is a test.

We can match most of this too. At position 16 again, with a length

of 16, the whole thing compresses breaking character of 0. One final

shift of 16 the length will yield us with our final bytes to encode,

but keep in mind that the look-ahead match length at that time will be

2 because of the size of the data left.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test.

At position 16, we can find the last portion to encode: t. Because

of the look-ahead buffer being only 2 bytes now, we still have to use

a breaking character of 0 - the decoder should be aware of this type of

thing happening.

The final encoding is:

This is a test. This is a test.16, 16, 016, 16, 016, 2, 0

As you can see, other methods could be present instead of just dumping

the window, such as starting with the window as empty and then use the

look-ahead buffer as the window, go by character by character sequences

as long as needed, and eventually get *some* compression on the window,

instead of just dumping it.

But what about decoding? This isnt as big of a nightmare as it looks.

The first thing to do is to read in your window that you stored when

encoding so you know what youre working with. Then, you sit and read

in each match position, match length, and breaking character and you

decode it to the look-ahead buffer as it fits it, and then you shift

the window to the left match length bytes...repeat until the input

stream is empty. If you encounter the odd case where the length is 0,

you know to just put the character solely, nothing from the window.

Lets try to decompress what we made before:

This is a test. This is a test.16, 16, 016, 16, 016, 2, 0

We now that This is a test. This is a test. is our compressed window,

so lets fill the window with that data immediately:

Compressed Window: Look-ahead:

This is a test. This is a test.

By nature, we see that the first code is 16, 16, 0 in the format of

position, length, and breaking character. That means we have to take

what we have at position 16 and copy 16 bytes of it into the look-ahead,

and since its the size of the look-ahead, we ignore the character that

broke the match instead of putting it at the end, as per normal.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test. This is a test

Now, we can dump the look-ahead buffer to the file now its full, and

we now shift by the length and continue 16.

Compressed Window: Look-ahead:

This is a test. This is a test

The next code is once again 16, 16, 0, so we must repeat the previous

step and decompress it.

Compressed Window: Look-ahead:

This is a test. This is a test. This is a tes

Now we dump the look-ahead because it is, once again, full. Shifting for

another 16 bytes is required.

Compressed Window: Look-ahead:

This is a test. This is a tes

Final code? 16, 2, 0, and we know because of the bytes decoded versus

the original size that the look-ahead can only be two bytes of data from

this sequence.

Compressed Window: Look-ahead:

This is a test. This is a test.

Dump those last two bytes and you should have decoded provided you DID

dump the window you started with first:

This is a test. This is a test. This is a test. This is a test.

Improvements:

Obviously, you must try to use the best combination of sizes of regular

windows and look-ahead buffers to get an optimal mix, but you dont want

to use a full byte...so if your window is only 64 bytes, then you really

dont want to use a full 8-bits when a mere 6-bits would do. These are

the little things though, and designing a system to work with them is up

to you. One other point of optimization is handling those troublesome

characters that just dont fit in the window as a length in a smaller

amount of bytes. These are left up as an exercise of the reader. The

reasoning behind this is that you will have your own methods derived

from the description anyway, and this will give you a chance to be a bit

inventive no pun intended.

On a side note, one simple method for handling whether something is a

window lookup set is to use nine bits - if the ninth bit isnt set, then

you merely output the lower eight bits in the decompression routine...

otherwise, you read the rest you need and do the length lookup in the

window as needed.

Conclusion:

Compression can rarely hurt things, and its often useful when you have a

data file that is ungodly in size and requires all the help it can get.

There are a greater variety of possible algorithms not described here for

a plethora of reasons, the main reason being that they are patented and

using them would be a problem however, if you would like to learn more

about other compression algorithms out there, then I recommend:

The Data Compression Book, 2nd Edition

Authors : Mark Nelson, Jean-Loup Gaily

ISBN : 1-55851-434-1

Publisher : MT Books

Anyway, good luck, and keep in mind to look for the small things that

will save more space try mixing and matching combinations of algorithms,

but just have some fun with it. Hopefully, this tutor reduced some of

the misconception of compression being so difficult.

Questions? Mail me at deepee@demoscene.interstat.net. Ill help all that

I can, but dont expect me to code it all for you.

Introduction:

Compression is a harsh mistress, and useful for enormous data stored with

and required for just about anything. In this tutorial, three different

types of compression will be covered, from relatively different areas.

Run Length Encoding:

Stop, dont skip this section. Although a simple beast, there are plenty

of improvements that can be made to standard RLE that will make it better

for your purposes, and it works well in combination with other algorithms

that have already taken place on the data.

The first step in just about any compression that will usually help the

performance is to take the deltas of the data. This would mean that you

start by storing the value of the first byte, and for every byte after

that, you subtract the next byte from the current byte and store the

result. Say you have the data:

1 2 3 4 5 6 7 8

1 Store the 1

2 Store the deltas:

stored 1 1 1 1 1 1 1 1

As you can see, this can only help the case in things like RLE. Now, the

premise behind RLE is that you store repeated bytes. This can be done by

a variety of methods, as you could store bytes that repeat, words that

repeat, or double words that repeat. To do this, you merely check if the

next character youve read from the stream is the same as the one you had

already read, and if it is, you keep reading ahead until the character no

longer matches. Then, you output something known as a sentinel a byte

character that will signify a repeated sequence followed by the length

and the character itself. What happens when the character is the same as

the sentinel? Simply output the length as 1 and the character.

Now, your mind may ask, Where are these so-called improvements? Well,

here they are:

1 Having a pre-defined sentinel is a bad idea. Scan the data for the

character that occurs the least and use that for your sentinel you

will need to store this in the file or memory somehow to be safe.

2 Make sure you output only sequences of 3 or more. Outputting two

as sentinel 2 character requires 3 bytes, while no compression

character character will take 2 bytes.

3 Dont waste three bytes for the character sentinel issue. Merely

put sentinel 0 and have the decoder of that RLE be prepared to

deal with that.

While not that significant, these can hardly hurt. There are also many

variations you can make at will to make it better yet, although that will

be left up to you. On to the next compression machine, one that will

actually compress things well.

Huffman Compression:

Alas, the performance of RLE is not the best out there. A technique that

works on the frequency of characters was developed by D. A. Huffman about

45 years ago. The technique is simple, in that it creates a binary tree

of the data and uses that to control the representation of characters.

Now, how is this binary tree created? By the frequency of the characters

and their combinations to form the tree. This is best demonstrated by

an example. Lets say we want to encode ABRACADABRA first, we will

tally the number of characters in this string.

A 5

B 2

C 1

D 1

R 2

We need to make a binary tree from this. By far, the easiest method to

accomplish this would be the use of a method that leaves your frequency

node holder as one node, and that node is the root node of the tree. How?

Well, the first step to this would be to sort the frequency node holder

so that the lowest two frequencies are at the front of the holder. Then,

you create a node that has the left and right child as the first and

second item in your holder. For the frequency of the node, you add the

frequency of the left and right child and store it in your newly created

node. Finally, you insert your created node into the list and remove the

the original first and second nodes from the list.

Now, to pair these together. Well, what are the two lowest characters? C

and D. So we now have this for data:

A 5, B 2, C + D 2, R 2

Sort again, and repeat...lets see what are tree will look like, as this

combining work will eventually leave you with one node, a root node, left

in the pile to work with that has all the nodes below us. Lets say it

looks like * represents a node combination of frequency :number:

**11

A5 *6

*4 *2

B2 R2 C1 D1

How the hell does this compress something!? Quite easily...the method

behind compressing is to compress by bits for representing characters,

which works well as the most frequent characters are on the top of the

tree. How? Simple, really. Every time you move left in the tree, you

have a bit value of 0 appended to your output bit string. Every time

you move right...1! Thats all there is to it. So lets go to work on

this with our tree.

ABRACADABRA...The first A will be a 0, because we went left. Now try to

do the rest. It should come out as:

A B R A C A D A B R A

0 100 101 0 110 1 111 1 100 101 0

01001010 11011111 10010100 4Ah DFh 94h

From 11 bytes down to 3 bytes, not bad. And this isnt even an optimal

case, as our tree is pretty ugly. But, where does that extra 0 come

from in our last byte? Well, we have to append something to it to make

it a full byte, and as long as the decoder knows the file size, it will

realize to stop and not do anything rash with the extra 0 bit Note:

this is not really the size, because the frequency table must also be

stored, making it 11 bytes versus 13 bytes, but I didnt really want to

spoil the example just because I couldnt manually encode longer data.

You may wonder how do we actually locate a node in our list. The easiest

way to do this is to store the location where the node ends up in a

table by its representation i.e. index 65 yields the node for A and

work backwards to obtain the bit representation for that character.

What about decoding? After all, it has to know the tree we used to

compress it...but theres a better method than writing the tree to the

file. Write the frequency of characters instead! The code that made the

tree the first time will make it the same way with the same data another

time, and this will cut down on the storage. So just store the number of

frequencies and then character:frequency for all the data to follow.

Now, the decoder merely reads the bit string and follows the tree as it

goes, and when it reaches a node that has nothing following it finally

has a character for its data, not a link of nodes, then it outputs

that character and starts back at the top until it finally outputs the

number of characters in the file.

Lempel-Ziv 1977:

Ah, now we move on to the wonderful world of dictionary-based compression

that gives us more of the compression were looking for. The algorithm

behind this is quite simple: you keep two windows of the stream youre

currently compressing. The first window is the actual lookup window, and

the second window is the look-ahead buffer, the part of the stream that

has not yet been compressed. How does this work? Well, you must first

find a method suitable to you to store the initial window, however many

bytes it is, or you will not be able to decode the file. Then, you must

encode *everything* that passes through that look-ahead buffer. The way

you do this is to look for the longest match of look-ahead buffer data

that is present in the already-encoded window. You then output the

position of the match, the length of the match, and the character that

broke the match. Then, you shift the window to the left by the

length of the match you made since you just encoded it and continue.

You must be careful to encode everything, even if something cant be

matched in the window...a work-around for that is as easy as putting the

match length and/or position as 0, with the character that broke the

match as your real character to encode. Here is a small example:

Note: The working window is 32 bytes, the look-ahead buffer is 16, and

the leftovers portion is what is yet to be compressed after the

look-ahead buffer the leftover stream still.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test. This is a test. This is a test.

Now, the data in compressed window is already in the file, so it is the

part of the window we can use for referencing. We now have to encode

look-ahead, shift, and then keep encoding until there is no look-ahead.

If we look through the look-ahead, we can actually compress the entire

beast in one shot! At position 16, with a length of 16, and a breaking

character of 0. Why do we do it that way? The breaking character is

an accident waiting to happen here, but I believe if you set it up this

way and just ignore the breaking character when the length is the size

of the look-ahead buffer, no problems should occur.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test. This is a test.

We can match most of this too. At position 16 again, with a length

of 16, the whole thing compresses breaking character of 0. One final

shift of 16 the length will yield us with our final bytes to encode,

but keep in mind that the look-ahead match length at that time will be

2 because of the size of the data left.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test.

At position 16, we can find the last portion to encode: t. Because

of the look-ahead buffer being only 2 bytes now, we still have to use

a breaking character of 0 - the decoder should be aware of this type of

thing happening.

The final encoding is:

This is a test. This is a test.16, 16, 016, 16, 016, 2, 0

As you can see, other methods could be present instead of just dumping

the window, such as starting with the window as empty and then use the

look-ahead buffer as the window, go by character by character sequences

as long as needed, and eventually get *some* compression on the window,

instead of just dumping it.

But what about decoding? This isnt as big of a nightmare as it looks.

The first thing to do is to read in your window that you stored when

encoding so you know what youre working with. Then, you sit and read

in each match position, match length, and breaking character and you

decode it to the look-ahead buffer as it fits it, and then you shift

the window to the left match length bytes...repeat until the input

stream is empty. If you encounter the odd case where the length is 0,

you know to just put the character solely, nothing from the window.

Lets try to decompress what we made before:

This is a test. This is a test.16, 16, 016, 16, 016, 2, 0

We now that This is a test. This is a test. is our compressed window,

so lets fill the window with that data immediately:

Compressed Window: Look-ahead:

This is a test. This is a test.

By nature, we see that the first code is 16, 16, 0 in the format of

position, length, and breaking character. That means we have to take

what we have at position 16 and copy 16 bytes of it into the look-ahead,

and since its the size of the look-ahead, we ignore the character that

broke the match instead of putting it at the end, as per normal.

Compressed Window: Look-ahead: Leftovers:

This is a test. This is a test. This is a test

Now, we can dump the look-ahead buffer to the file now its full, and

we now shift by the length and continue 16.

Compressed Window: Look-ahead:

This is a test. This is a test

The next code is once again 16, 16, 0, so we must repeat the previous

step and decompress it.

Compressed Window: Look-ahead:

This is a test. This is a test. This is a tes

Now we dump the look-ahead because it is, once again, full. Shifting for

another 16 bytes is required.

Compressed Window: Look-ahead:

This is a test. This is a tes

Final code? 16, 2, 0, and we know because of the bytes decoded versus

the original size that the look-ahead can only be two bytes of data from

this sequence.

Compressed Window: Look-ahead:

This is a test. This is a test.

Dump those last two bytes and you should have decoded provided you DID

dump the window you started with first:

This is a test. This is a test. This is a test. This is a test.

Improvements:

Obviously, you must try to use the best combination of sizes of regular

windows and look-ahead buffers to get an optimal mix, but you dont want

to use a full byte...so if your window is only 64 bytes, then you really

dont want to use a full 8-bits when a mere 6-bits would do. These are

the little things though, and designing a system to work with them is up

to you. One other point of optimization is handling those troublesome

characters that just dont fit in the window as a length in a smaller

amount of bytes. These are left up as an exercise of the reader. The

reasoning behind this is that you will have your own methods derived

from the description anyway, and this will give you a chance to be a bit

inventive no pun intended.

On a side note, one simple method for handling whether something is a

window lookup set is to use nine bits - if the ninth bit isnt set, then

you merely output the lower eight bits in the decompression routine...

otherwise, you read the rest you need and do the length lookup in the

window as needed.

Conclusion:

Compression can rarely hurt things, and its often useful when you have a

data file that is ungodly in size and requires all the help it can get.

There are a greater variety of possible algorithms not described here for

a plethora of reasons, the main reason being that they are patented and

using them would be a problem however, if you would like to learn more

about other compression algorithms out there, then I recommend:

The Data Compression Book, 2nd Edition

Authors : Mark Nelson, Jean-Loup Gaily

ISBN : 1-55851-434-1

Publisher : MT Books

Anyway, good luck, and keep in mind to look for the small things that

will save more space try mixing and matching combinations of algorithms,

but just have some fun with it. Hopefully, this tutor reduced some of

the misconception of compression being so difficult.

Questions? Mail me at deepee@demoscene.interstat.net. Ill help all that

I can, but dont expect me to code it all for you.

log in to add a comment.